A PLAN FOR SCHOOL IMPROVEMENT THROUGH AUTHENTIC ASSESSMENT

Or Write is Right

A. Select one or more improvement aims for your school.

Dear Dr. Raringtogo:

As you know, Samurai High School will soon spawn a new middle school, Samurai Middle School. As probable English department chairman, I propose that Samurai Middle School adopt a version of the California-based Write Assessment as a means of measuring writing achievement and of deciding which students achieve promotion to the ninth grade.

As a brand-new school, Samurai Middle School currently has no mission statement and no goals. I propose the following as a mission statement: Samurai Middle School will provide the opportunities for students to learn how to become lifelong learners.

Such a mission statement suggests the range of opportunities, i.e. inputs, the school will provide. To support this, however, I suggest that one of the school's goals specifically mention measurable outputs in the area of writing. This follows the reasoning of Mike Schmoker (1997), among others, who suggests that schools set only two goals, one of which involves student learning. Hence, as one goal, I suggest: Samurai Middle School will enable all students to obtain a basic level of competence in writing.

As you know, the general policy within DoDDs is social promotion for pre-high school students. Students, as a result, tend to view their middle school years as something of a time to play and socialize. Many devote their eighth grade year, in particular, to more entertaining pursuits than learning. In terms of writing, however, students must continue to strive and grow in order to prepare for the vigorous curriculum of Samurai High School.

For that reason, I propose that Samurai adopt the Write Assessment test as both a terminal exit examination and an ongoing assessment for the school. The Write Assessment represents an instance of authentic assessment. For 15 years, Los Angeles students had to pass the Write test in order to graduate from high school. Adoption of the Write will necessitate positive changes in teaching at Samurai Middle School and serve as a standard against which students can measure themselves and their progress at Samurai Middle School.

If this program proves successful, it can serve as a model for DoDDs-Japan or even the entire DoDDs system. The attached paper explains the rationale behind adopting the Write.

Please read the attached executive summary and proposal.

Thank you for your time and attention.

Sincerely,

Daniel R. Fruit, M.A., M.S., MPA

B. Proposed Change: Executive Summary of Supporting Documents

The following pages show the logic for adopting the Write Assessment at Samurai Middle School. The Write is an instance of authentic assessment, a performance measure that tries as much as possible to mirror tasks in the real world. In terms of writing, authentic assessment commonly refers to performance measures in which students write responses to prompts, followed by holistic scoring. Unlike tests such as the CTBS, authentic assessment writing tests measure against an absolute standard, not against the performance of other students.

The main arguments for authentic assessment deal with its greater "authenticity" and its criterion-referenced grading. By simulating performances closer to the real world, authentic assessments offer teachers and students better feedback. By providing an absolute standard, authentic assessments allow students to measure their growth over time and against an "unmoving goal-post."

Critics of performance assessment point out some weaknesses that include the "subjectivity" of grading and the lesser breadth of skills tested. Specific strategies can control this subjectivity. California and other states report success with authentic assessment. For teachers and students, the feedback from "knowing how you're doing" can provide motivation as well as measurement.

Currently, the two parent schools of Samurai Middle School, Samurai High School and Samurai Elementary, give students a number of tests which, either through usage or composition, don't rate the term "authentic assessment." By using the Write, and providing abundant feedback, Samurai Middle School can shore up a weak assessment program.

Having established the benefits of authentic assessment, this proposal suggests a program culminating with using the Write Assessment as a "high stakes" exit examination. Students would take the test in sixth, seventh, and twice in eighth grade. Passing students would enter regular ninth grade classes. Remedial programs would provide extra help to those who do not pass in middle school as well as providing a motivation to pass.

The institutionalization of the Write Assessment would, by design, give focus to the middle school program, in general, and the English program in particular. All classes, not just English classes, could practice administering prompts to students and familiarizing them with the format of the assessment. The accumulation of scores, over time, moreover, could provide a rough measure of how both students and teachers perform.

While this program might encounter initial community resistance, greater familiarity with the test, over time, should channel a lot of that anxiety into productive work and program improvement. The Write would become the central focus of the Samurai Middle School curriculum.

The Write Assessment for Samurai Middle School

A. The Need for Assessment In DoDDs

Samurai Middle School is a new school. For that reason, its opening represents an ideal opportunity to institute new practices, goals, and standards. Standards require evaluation. Recently, President Clinton himself spoke on the very subject of instituting DoDDs standards and measuring them. Clinton (1997, p. 1) acknowledged that DoDDs students come from "every racial and ethnic background" and "move from place to place" with their parents. Clinton found that:

"Because of this mobility, no group of students better underscores the need for common national standards and a uniform way of measuring progress than this group. If standards can work in these schools, they can work anywhere."

Lilian Gringes, director of DoDEA, which includes DoDDs, expanded on Clinton's remarks. She stated that in addition to academic programs, DoDEA must "commit itself to the long-term support of programs which strengthen these school systems' ability to develop challenging standards and to help all children." She emphasized that:

"Only with a standard measure of excellence will we be able to determine the success of our efforts to improve student performance, curriculum, and instruction. In keeping with the President's memorandum of March 13, DoDEA will form a committee to determine the feasibility of aligning current standards with the National Standards. We will be coming to you, shortly, and asking your help with this very important endeavor."

Dr. Gringes's remarks show, again, an interest in instituting standards for DoDDs students. To those familiar with DoDDs, however, both Clinton's and Gringes's remarks might seem peculiar, even strange, as DoDDs students at all levels face a veritable battery of testing instruments that claim to measure student learning. In addition to teacher-written tests and examinations, students in the new middle school will take:

(1) the Terra Nova performance assessment test in grade 8, a mixed criterion-referenced, short essay test

(2) the ASAP, a performance-based writing assessment, in grade 8

(3) the CTBS, norm-based, criterion-referenced tests, in grades 6 and 7

While graduating students ought to have, ideally, many skills, writing deserves selection as a curriculum emphasis, at least initially. Students use this skill in all of their classes. Further, writing encourages higher order thinking skills. Also, the Write offers a proven means of measurement.

One might make the case that other skills merit just as much attention as writing. Surely, mathematical skills, for example, deserve as much attention as writing, the subject of this proposal. Writing, however, offers students a chance to develop and display critical thinking. Further, while many laud the importance of math skills, in reality students do not use them in all classes. Students, particularly with the adoption of the new "mathland" program, have to write in all four major academic classes. Beyond this, students have to write in the real world: college applications, resumes, letters, even email. Moreover, this proposal doesn't necessarily have to function as the only authentic assessment for the school. Rather, it might represent a point of departure for a thorough program of authentic assessment. Such a program, however, must begin somewhere, and writing seems a worthy place to begin. With the Write, the means of assessment already exist.

Before delving into this, however, it's necessary to give some background on the subject of authentic assessment in general and, more specifically, authentic assessment in terms of writing.

B. Authentic Assessment: What It Is

In the last ten years, a number of writers, among them Grant P. Wiggins (1993), Ruth Mitchell (1992), and Mark A. Baron and Floyd Boschee (1995), have advocated the use of "authentic" assessment. In order to understand their advocacy, however, it's important to understand the meaning of some key terms, including "authentic assessment." In some of the literature, authors also use the term "performance assessment." For clarity's sake, this paper will use only the one term.

In general, an "authentic" assessment endeavors to make the assessment as close as possible to real life situations. In fact, however, the use of the term "authentic assessment" as a discrete category of task causes some confusion because it leads to classifying some tasks as "authentic" and others "not authentic." A more reasonable way to categorize assessment employs rating tasks according to their authenticity. Tasks commonly termed "authentic" sit at one end of the scale while those with a more tenuous connection to the real world would sit at the other. A very "inauthentic task," for example, might be to do a sheet of math problems. Story problems rate further down the scale of authenticity, and going to the supermarket and performing calculations related to actually buying something anchors the opposite end. For the purposes of this analysis, "authentic assessment" will refer to tasks towards the authentic end of the scale below:

A Scale of Authenticity

INAUTHENTIC-ARTIFICIAL                                    AUTHENTIC-REALISTIC
[___________________________________________________________________________]
math worksheet               story problems                    store purchase

Baron and Boschee (1995, p. 8) offer the following list of characteristics of tasks exemplifying authentic assessment. Ideally authentic assessments:

". involve criteria that assess essentials, not easily counted (but relatively unimportant) errors

. are not graded on a "curve" but in reference to performance standards (criterion-referenced, not norm-referenced)

. involve de-mystified criteria of success that appear to students inherent in successful activity

. make self-assessment a part of the assessment

. use a multifaceted scoring system instead of one aggregate grade

. exhibit harmony with shared school-wide aims - a standard."

Grant P. Wiggins (1993, p. 13) makes an important distinction between "testing" and "authentic assessment." An assessment is a "comprehensive analysis of performance" while a test is merely "an instrument, a measuring device." A test, then, may be an authentic assessment or it may not; an authentic assessment may or may not be a test.

This paper will show that Samurai Middle School needs to adopt an assessment for writing as "authentic" as possible that is also a test, i.e. something that will yield measurable data. A number of authentic assessment writing tests exist. They all use "prompts," "rubrics," and "anchors," three other terms that need defining.

A "prompt" supplies a written stimulus for writing. It provides the English equivalent of a math story problem. A well-written prompt allows ALL students to respond, regardless of ethnicity, past life experiences, etc., since the assessment aims to measure the skills of writing. The following is an example of a prompt used on the CAP test (Mitchell, pp. 31-32):

"Your English teacher has asked you and other students in your class to help select literature for next year's grade eight classes. Think about all the works of literature - stories, novels, poems, plays, essays - you've read in your English class. Choose the one you have enjoyed most.

Directions for Writing: Write an essay for your English teacher recommending your favorite literary work. Give reasons for your judgment as to why other grade eight students should read this work. Tell the teacher why this work is especially valuable."

Machines, obviously, cannot score responses to a prompt. Instead, a group of scorers convenes and gives each paper typically two, and sometimes three, readings using a "rubric" and "anchor papers" before assigning a single score based on the overall strength of the work.

A "rubric" is a list of characteristics that distinguish a certain level of performance. It functions in place of the "right answer" on tests such as the CTBS because a performance evaluation allows reporting multiple levels of performance instead of "right" and "wrong." Scorers weigh a written work against a list of characteristics in order to find a "best-fit." The Arizona State Assessment Program (ASAP) uses the following rubric in its scoring (Rudner, p. 71):

"A '4' paper fully addresses the prompt and is written in a style appropriate to the genre assessed. It clearly shows an appropriate awareness of audience. The paper 'comes alive' by incorporating mood, style, and creative expression. It is well organized, containing sufficient details, examples, descriptions, and insights to engage the reader. The paper displays a strong beginning, a fully developed middle, and an appropriate closure.

A '3' paper addresses the prompt and is written in an appropriate style. It is probably well-organized and clearly written but may contain vague or inarticulate language. It shows a sense of audience but may be missing some details and examples. It has incomplete descriptions and fewer insights into characters and topics. The paper may show a weak or inappropriate beginning, middle, or ending.

A '2' paper does not fully address the prompt, which causes the paper to be rambling and disjointed. It shows little awareness of audience. The paper demonstrates an incomplete or inadequate understanding of the appropriate style. Details, facts, examples, or descriptions may be incomplete. The beginning, middle, or ending may be missing.

A '1' paper barely addresses the prompt. Awareness of audience may be missing. The paper demonstrates a lack of understanding the appropriate style. The general idea may be conveyed, but details, facts, examples, or descriptions are lacking. The beginning, middle, or ending is missing."

An "anchor" paper gives a concrete example of a given level of performance. Before an evaluation session, scorers look at anchor papers representing the entire spectrum of possible scores and practice rating them. This heightens the reliability of the scoring session.

One other important term, "validity," requires special definition as it pertains to authentic assessment. As any statistics 101 student can explain, a "reliable" test returns the same results consistently while a "valid" test measures what it claims to measure. An ideal test tries to attain both high validity and reliability at a reasonable cost.

The concept of "validity," however, takes on a new complexity when discussing authentic assessment. The advocates of authentic assessment claim a high validity (Wiggins 1989) because their tests come as close as possible to real world tasks, i.e. "real world validity." Those attacking authentic assessment (Hirsch 1989 and others) will claim authentic assessments lack validity because such assessments don't measure a range of skills supposedly analyzed by the test, i.e. "content validity." The question becomes one of "valid to what?"

Fortunately, however, because DoDDs already employs a variety of testing instruments, this debate has little importance for Samurai Middle School. The CTBS, for example, has a high degree of what Hirsch would term validity. That allows the application of the standard of "real-world" validity to the authentic assessment instruments, the Terra Nova, the ASAP, and the Write. Further, a fair amount of evidence indicates that authentic assessments also contain a high degree of content validity (Mitchell 1992).

Having defined the terms of discussion, it's possible to examine the good and bad points of authentic assessment testing.

C. The Advantages of Authentic Assessment

A review of the literature considering authentic assessment shows most authors favor authentic assessment tests as a replacement for or supplement to traditional, norm-referenced testing. Scholars in favor include Baron and Boschee (1995), Darling-Hammond (1991), Guskey (1996), Madaus (1991), Mitchell (1992), and Wiggins (1993) among others. Of these authors, Wiggins enjoys a considerable reputation as an expert on the subject of assessment. Gardner, the inventor of the concept of "multiple intelligences," supplies a psychological foundation for the concept. Ed Hirsch (1989), in contrast, brings up some important criticisms of this assessment method, explored in the next section. These pro-authentic assessment authors, in general, find the following four advantages summarized by Baron and Boschee (1995, pp. 9-10):

(1) Authentic assessment techniques measure directly what educators want students to know.

(2) Authentic assessment techniques emphasize higher order thinking skills, personal judgment, and collaboration.

(3) Authentic assessment urges students to become active participants in the learning process.

(4) Authentic assessment allows and encourages teachers to "teach to the test" without destroying validity.

These points bear some discussion, but I would add a fifth important point:

(5) Evidence shows authentic assessment improves learning.

The first point seems almost axiomatic: if you design a test to fit the real world, it should show students what they ought to learn. It presumes, however, that teachers have accepted that what is taught should find its validity in terms of the real world. At best, the literature provides much evidence that teachers should adopt this view (Baron and Boschee). In the area of writing, for example, few if any DoDDs students will ever encounter lists of sentences underlined and need to identify the letter of the correct one. On the other hand, most students will need to write business letters and autobiographical sketches (on their resumes) tested by the ASAP, Write, and CAP. Hence authentic assessments better match real world tasks.

Hirsch, again, questions the second claimed advantage: that authentic assessment measures higher-order thinking skills. Other authors agree with Baron and Boschee (1995) that authentic assessment tests measure higher order thinking skills at least as well as, if not better than, norm-referenced tests. As the above sample prompt and rubrics show, authentic assessment problems typically involve tasks that Bloom's taxonomy would classify as evaluation, synthesis, and application. Again, however, since DoDDs also administers the CTBS, even if Hirsch has a point, it's not a particularly important one.

The third advantage relates to the subject of "feedback." In traditional norm-referenced tests, such as the CTBS, students' parents receive a "score" months later. Since each test iteration differs from the previous one, students, at best, can find out the mistakes made only much later. This provides, at best, information only about what students didn't learn. Further, the CTBS "norms" scores, returning a percentile rather than an absolute test result, meaning a student merely knows how he/she performed compared to others, not how well he/she really did against an absolute standard. With DoDDs' one-third turnover rate, the student can conclude very little about his/her performance and even less about his/her improvement over time.
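The difference between a normed percentile and an absolute standard can be illustrated with a short sketch. Everything in it is hypothetical: the two cohorts, the 1-6 score scale as applied here, and the cut score of 4 are invented for illustration and do not come from CTBS, ASAP, or Write data.

```python
from bisect import bisect_left


def percentile_rank(score, cohort_scores):
    """Norm-referenced view: percent of the cohort scoring strictly below."""
    ordered = sorted(cohort_scores)
    below = bisect_left(ordered, score)
    return 100.0 * below / len(ordered)


def meets_standard(score, cut_score):
    """Criterion-referenced view: compare against a fixed standard."""
    return score >= cut_score


# Hypothetical data: the same student score measured against two cohorts.
CUT_SCORE = 4  # assumed passing standard, for illustration only
student_score = 4
weaker_cohort = [1, 2, 2, 3, 3, 3, 4, 5]
stronger_cohort = [3, 4, 4, 5, 5, 5, 6, 6]

print(percentile_rank(student_score, weaker_cohort))    # 75.0
print(percentile_rank(student_score, stronger_cohort))  # 12.5
print(meets_standard(student_score, CUT_SCORE))         # True
```

The same performance lands at the 75th percentile in one group and the 12.5th in another, while the result against the fixed standard does not change. With a student body that turns over by a third each year, only the second kind of result lets a student track growth.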

It is possible, and unfortunately is DoDDs practice in the case of the ASAP, to administer an authentic assessment in nearly the same fashion. Generally, however, students and teachers preparing for performance-based assessment practice the kinds of problems tested beforehand. Wiggins terms such practice a "rehearsal." Since performance-based assessment has a high degree of real world validity, even the rehearsal has an inherent value, but there's more: since authentic assessment tests have an absolute, not a relative, performance scale, students can practice, see improvement, and continue to practice, a powerful enhancement to motivation.

The subject of "rehearsal" brings us to the fourth key advantage: "teaching to the test." Good teachers have always written their lessons in conjunction with the evaluation. Baron and Boschee, Mitchell, and Wiggins all point out that there's nothing wrong with teaching to the test if the test is worth taking, meaning it tests skills amply worth knowing. With the Write and ASAP, properly administered, not only teachers, but students, can look beforehand at their work and see areas in need of improvement. The test, then, becomes no more than a final validation of what students are in the process of learning about their own performance at tasks useful in the real world. The test becomes not a foreign instrument but an integral part of the curriculum, which has certain implications explored later in this paper.

The fifth point is, in fact, in many ways more important than the first four. To discuss the concept of "real world validity" without examples of real world success would invite more than a little cynicism. Individual states and school districts have had "authentic" success with authentic assessment, in terms of measurable gains in student learning. Rubrics have been proven to provide better feedback because they clarify the criteria for evaluating student work (O'Neil 1994). Studies by Hillocks (1987) and Csikszentmihalyi (1990, in Schmoker) found that people work more productively if they have clear targets. California successfully improved writing with the CAP (Mitchell) and only discarded it for budget reasons. Maryland, after a decade (quoted in Schmoker), found that the percentage of students meeting state writing proficiency goals rose from 47 to 90 percent. Altogether, 27 states felt impressed enough to follow California (Mitchell, p. 43) in instituting authentic assessment writing tests.

One might reasonably argue that Maryland students only learned to write better on one test, but if that test has strong, real-world validity, then students' success has inherent value, other issues aside. To argue that students who do well on Maryland's MSAP don't really "know how to write" is rather like arguing that students who finish drivers' education don't really understand the automobile, its mechanics, its engine. All this may be true, but in the real world the vast majority will be driving the car, not explaining its mechanical intricacies. Arizona educators (in Mitchell, p. 40) compared their norm-referenced ITBS and TAP to their ASAP-like ESD and found that the latter test covered 100% of the contents of the former while the multiple-choice tests covered only 26-30% of the items on the ESD. So the authentic assessment provided the far better measure of students' writing skills.

Authentic assessment, then, has some obvious advantages, but it also has some disadvantages worth considering.

D. Performance-Based Assessment: Some Disadvantages

Baron and Boschee (1995, p. 11) point out three important disadvantages of authentic assessment. These also figure into Hirsch's (1989) defense of traditional, norm-based tests, such as the CTBS, against the advocates of authentic assessment. Key disadvantages include:

(1) high cost

(2) difficulty in making results consistently quantifiable, objective, standardized, and aggregatable

(3) undemonstrated validity, reliability, and comparability of the more subjective scoring systems and their results: The key issue remains subjectivity in evaluating performances.

The key cost consideration involves the use of human scoring. Scoring requires training, and training costs money. In fact, though, DoDDs already trains teachers to score the ASAP tests. It's possible to have the same scorers score the Write Assessment. Given that a scoring group scores each essay in about 30 seconds, each essay receives 2 scores, and each paper contains 2 essays, the average Write Assessment takes 3 minutes to score, including a minute for handling. All essays from Samurai Middle School, then, would take a total of only about 8 man hours to score. Even assuming $40/hour of release time and training, scoring an entire school would cost only $400 and take roughly a morning's work. The calculations appear below.

2 essays * 1/2 minute each * 2 scores = 2 minutes + 1 minute for transportation = 3 minutes

3 minutes/student * 150 students = 450 minutes = about 8 man hours

8 man hours * about $40/hour = $320

2 man hours (training) * about $40/hour = $80

total = $320 + $80 = $400
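The same estimate can be written as a small sketch so that the inputs are easy to vary. The default values are the figures quoted above; the function and field names are illustrative only, and rounding the scoring time up to whole hours is an assumption that matches the "about 8 man hours" figure.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoringEstimate:
    minutes_per_student: float
    scoring_hours: int
    scoring_cost: int
    training_cost: int

    @property
    def total_cost(self) -> int:
        return self.scoring_cost + self.training_cost


def estimate_scoring_cost(
    students: int = 150,
    essays_per_student: int = 2,
    minutes_per_reading: float = 0.5,
    readings_per_essay: int = 2,
    handling_minutes: float = 1.0,
    hourly_rate: int = 40,
    training_hours: int = 2,
) -> ScoringEstimate:
    # Time to read both essays twice, plus handling time per paper.
    minutes_per_student = (
        essays_per_student * minutes_per_reading * readings_per_essay
        + handling_minutes
    )
    # Assumption: round up to whole hours (450 minutes becomes 8 hours).
    scoring_hours = math.ceil(minutes_per_student * students / 60)
    return ScoringEstimate(
        minutes_per_student=minutes_per_student,
        scoring_hours=scoring_hours,
        scoring_cost=scoring_hours * hourly_rate,
        training_cost=training_hours * hourly_rate,
    )


estimate = estimate_scoring_cost()
print(
    f"{estimate.minutes_per_student} min/student, "
    f"{estimate.scoring_hours} hours, ${estimate.total_cost}"
)
# prints: 3.0 min/student, 8 hours, $400
```

Doubling enrollment to 300 students under the same assumptions raises scoring time to 15 hours, which suggests the cost stays modest even for a larger school.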

Further, the training for scorers represents a benefit as well as a cost because it teaches teachers how to perform holistic scoring, which they can carry over into their classes. Los Angeles traditionally invited teachers from all disciplines to score the Write on the assumption this would encourage writing in other disciplines. Significantly, the district found no difficulty in training teachers in other disciplines to score the tests. The training would support DoDDs' efforts to promote writing across the curriculum. Adopting the Write Examination at Samurai Middle School represents one of the better "bang for buck tradeoffs" in the history of DoDDs.

The second point returns to the subject of reliability and, in the end, logistics. Hirsch (p. 184) points out, correctly, that scorers found that even after the lapse of a weekend they needed to engage in 're-calibration' sessions. He also cites a familiar case (Starch and Elliott, 1912, 1913a, 1913b, cited in Guskey) in which 300 different teachers scoring a given paper mailed to them gave that paper grades that ranged from a fail to an "A." As Wiggins (1993) points out, teachers scored this paper with no context. Designers of performance evaluation assessments have gone to great lengths to avoid exactly this problem through calibration sessions, anchor papers, and rubrics. Given that three Samurai Middle School or Samurai High School teachers, properly trained, could rate all the Write tests within a single morning, it's possible to obtain a high degree of reliability. This author found that after not grading an authentic assessment test for ten years, he could still rate all ten anchor papers correctly at a practice ASAP session. Chelsea School District, scoring a similar authentic assessment, found a 70% inter-rater reliability (Paratore, 1997).

The third criticism turns to subjectivity. Much has been written regarding the "subjective nature" of authentic assessment and the equally subjective nature of norm-referenced tests. Again, the calibration sessions, anchor papers, etc. all aim to limit the subjectivity. In the end, however, human beings rate the paper. As many authors point out, though, norm-based tests have a different kind of subjectivity related not to the grading of answers but to the selection of questions (Wiggins 1989). Since DoDDs uses multiple measures of assessment, DoDDs can actually compare the two. Moreover, it's important to remember that authentic assessment measures its validity in terms of the real world. Almost every writing situation in real life requires a subjective evaluation by the evaluator, including letters of complaint, college applications, and job resumes. Even if authentic assessment doesn't yield similar results to the CTBS, the results may have real value, particularly given the advantages outlined above.

In general, then, the advantages of authentic assessment outweigh its disadvantages and argue that Samurai Middle School should adopt some version of authentic assessment. Ironically, as the next section explains, Samurai Middle School students don't lack for writing assessment tests, but the writing assessments lack authenticity.

E. What's Wrong With the Terra Nova, the ASAP, and the CTBS?

Currently, Samurai High School and Samurai Elementary School use the CTBS, the ASAP, and the Terra Nova for middle school age students. The CTBS, a traditional norm-referenced test, taken by 6th and 7th graders, sits at the left end of the authenticity scale. It assesses writing, at best, indirectly. It shares all of the disadvantages of traditional, norm-referenced tests. Further, students take it only in grades 6-7.

DoDDs adopted the Terra Nova specifically to overcome some of the weaknesses of the traditional CTBS test. The Terra Nova includes multiple choice sections, short answer, and short essay. The Terra Nova, however, suffers from its inclusiveness. Some sections on science, social science, and math include a writing score. While this fits in with the philosophy of writing across the curriculum, it yields inferior feedback to a test that only tests writing and may suggest deficiencies in the other disciplines where none exist.

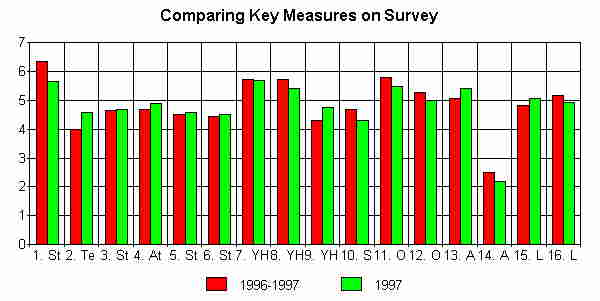

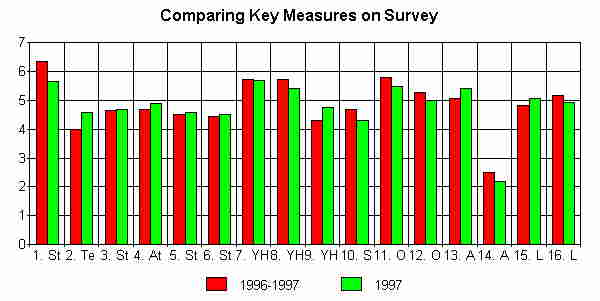

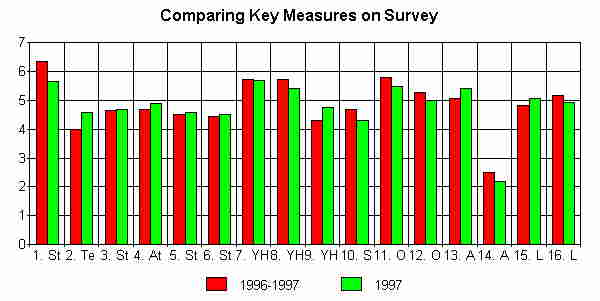

The ASAP test offers a good example of authentic assessment misused, or "nearly authentic assessment." Students have a chance to follow the writing process. The exam occurs over several days. It uses prompts, rubrics, and anchors. Students have to write in domains that include (1) a friendly letter (2) a business letter (3) an explanation (4) a personal opinion (5) a story. Each class receives a set of prompts that cover all of the domains. Students receiving the same prompts then have an opportunity to discuss. A second and third day offer ample opportunities for students to follow the writing process. The ASAP yields a wealth of data to DoDDs, but not to students, who do not receive their scores. Even if they did receive this data, further, the matrix system precludes direct comparison. In other words, the ASAP lacks two of the characteristics of authentic assessment: student feedback and teacher motivation to "teach to the test." The chart below shows the tests in terms of authenticity:

A Scale of Authenticity

INAUTHENTIC-ARTIFICIAL                                    AUTHENTIC-REALISTIC
[___________________________________________________________________________]
CTBS                  Terra Nova                  ASAP test             Write

According to Grant Wiggins, the "student is the primary client for all assessment." DoDDs, in all three cases, fails notably in serving that client. Students never receive their ASAP scores. Samurai High School and Samurai Elementary send out CTBS test results, but to parents. Another set goes into student files. The same will happen with the Terra Nova. No important decisions that affect students rely on the test results.

This raises a fundamental question, often asked during these tests by students, particularly during the third day of the ASAP: Who cares?

This leads to a related question: If students take a test with no motivation for doing well, will the results yield any useful data let alone incentive to learn?

Logic, and years of administering tests, would suggest that these tests are not very useful in determining the level of learning of students in any area, including writing, and even less useful in encouraging their learning. Observation shows most students treat the three tests above as simply an annoyance or a somewhat interesting break in routine.

F. Why the Write Test and Why High Stakes?

Given DoDDs' current testing situation, it seems a bit ludicrous to propose administering yet another test. Yet the Write, and the suggested manner of implementation, differ in important ways from the tests and testing situations currently employed by DoDDs.

Unlike the ASAP, the Write takes place in a single sitting. The prompts cover the following domains: (a) business letter, (b) friendly letter, (c) reasons for an opinion, (d) description, (e) autobiographical incident, and (f) explanation. This represents a reasonable spectrum, as realistic as the ASAP. In the 90-minute session, students respond to two prompts. All students write to the same prompts. With ninety minutes, students have some time to prewrite and rewrite, but not an enormous amount. Each student receives a score from 1 to 6, similar to the CAP scores above, with a "0" reserved for blank papers. Unlike the ASAP, students cannot discuss the prompts with one another, a point that might make the Write less "authentic" but better able to distinguish individual performance.

A key component of the Write, as used in Los Angeles, involves consequences. Students need a "passing" score to graduate from high school. To pass, a student needs an "8." Scorers arrive at that score by simple addition. Most students, including Los Angeles' minority population, take, and pass, the test in 9th grade. Students who don't receive a passing score retake the Write in 10th grade. Those who fail take it again in 11th grade. A senior can take the test two more times as well, so that students take the test as many as five times. Students, though, must pass that test to obtain a diploma. This provides students with a very strong motivation to do well on that test and a very strong motivation for teachers, particularly in the 9th grade, to prepare them to do well. In a district of 100,000 students from many ethnic groups, only about 1% didn't graduate due to failing the Write. This leads to the subject of motivation.
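The pass rule described above can be sketched in a few lines. This is only an illustration, and it rests on an assumption the text does not spell out: that "simple addition" means adding the scores from the two prompts, each scored 1 to 6 (or 0 for a blank paper). The names `total_score` and `passes` are hypothetical, not part of any official scoring procedure.

```python
# Illustrative sketch only. Assumption: the "8" is the sum of the two prompt
# scores. The function names are hypothetical, not an official procedure.

PASSING_TOTAL = 8   # the passing score cited in the text
MIN_SCORE = 0       # reserved for blank papers
MAX_SCORE = 6       # top of the holistic scale


def total_score(first_prompt: int, second_prompt: int) -> int:
    """Add the two prompt scores after checking they are on the 0-6 scale."""
    for score in (first_prompt, second_prompt):
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"score {score} is outside the 0-6 scale")
    return first_prompt + second_prompt


def passes(first_prompt: int, second_prompt: int) -> bool:
    """Return True when the combined score reaches the passing total."""
    return total_score(first_prompt, second_prompt) >= PASSING_TOTAL


if __name__ == "__main__":
    print(passes(4, 4))  # two solid papers
    print(passes(6, 0))  # one strong paper and one blank paper
```

Under this assumed rule, a student cannot pass on the strength of one paper alone: even a top score of 6 paired with a blank paper falls short of 8.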

Many authors have written on the subject of motivation. As Madaus observes, "there is little empirical evidence about the motivational effect of tests with important, but rather abstract, rewards or sanctions on young children from very diverse backgrounds" (Madaus, p. 228). The key to Los Angeles Unified's implementation of the Write test was that rewards and sanctions were extremely concrete and meaningful. This makes the Write, as used in Los Angeles, even more authentic because it brings out an important point: In the real world, when adults write, there are always potential consequences, or writing would not take place.

This use of high stakes differs considerably both from the current eighth grade curriculum and the tests discussed above. Students have no incentive to do well on those tests, as they provide nothing to the student and take nothing away. The same case might be made for social promotion: it encourages students not to perform.

For that reason, this paper proposes that students take the Write at the end of each year at Samurai Middle School and as a terminal exit exam for Samurai Middle School. Though, again, LAUSD used this test primarily in the ninth grade, DoDDs students at Samurai generally have a better education, comparable to that of a stateside middle-class neighborhood, justifying a tougher standard. Students who don't pass the test by the end of 8th grade would automatically enroll in a mandatory intensive writing class, to take place the summer before they start high school, and undergo re-testing at the end of that session. Any who failed again would take an intensive writing class the first semester of 9th grade.

This proposal will yield the following advantages to Samurai High School:

(a) The Write will yield immediate data as to students' writing progress, both absolutely and in comparison to other students.

(b) The Write will provide students with an incentive to learn how to write.

(c) The ASAP will find its place, quite properly, as a rehearsal for the Write and the data it yields will then have some value.

(d) The Write will set a minimum standard for promotion and a strong foundation for high school work.

(e) The Write will require positive changes in the curriculum.

(f) The Write will provide performance data about the school as a whole.

G. The Curriculum and The Write Test

One of the strengths of authentic assessment is that it encourages teachers to "teach to the test." Maryland, with its long experience with tests of writing, responds to this: "If the outcomes are worth spending time on, if the tasks really are demonstrations of understanding...then that's what we ought to be teaching to."

In a high-stakes situation, students and parents will demand that teachers prepare their students for the Write. In reality, the Write tests the forms of writing typically taught by teachers in grades seven and eight. What will likely happen is that English teachers will decide which domains to teach in grades 6-7, but 8th grade teachers will assume responsibility for final preparation. Teachers will hold frequent practice sessions, particularly in grade eight, to assure the highest possible pass rate.

In this way, students will develop an acute awareness of how well they're doing and how much they have to improve in order to reach (and hopefully surpass) that magic "eight." They will also become quite sharp at judging good and poor writing. This author found his own students, after a year of test preparation, as able to holistically judge papers as most professionals.

Moreover, as a review of the domains above suggests, other departments can easily supply prompts that fit their disciplines, leading to just the kind of "writing across the curriculum" projects done in top middle schools across the United States and advocated by DoDDs. California, after instituting the CAP, found that 94% of all teachers assigned a greater variety of writing and 78% a greater quantity (quoted in Mitchell).

In fact, then, the Write will to a large extent become the centerpiece of the Samurai Middle School curriculum in much the same way the I.B. works in good international schools as a driving force for achievement. Further, again, it may lead to suggestions for school-wide authentic assessments that test other necessary skills, such as mathematics.

There's another possible advantage and consideration regarding the Write. If the school keeps the data regarding the scores, it can measure the relative achievements of not only students, but the school itself. While teachers and their union would likely oppose basing their performance ratings on such scores, the differing schools might choose to publish them in DoDDs publications in the spirit of healthy school competition. After all, DoDDs does publish scores for sporting events. This might serve as a positive incentive to everyone involved.

H. What if They Fail the Test?

To kids and their parents, this question will loom very large. Some students will fail, but probably far fewer than suspect they will, as the figures above suggest. Long hours of practice and frequent rehearsals, moreover, will mean that failure will come as no surprise. The consequences outlined above, essentially losing a summer, will seem very odious to students but will prove beneficial as feedback to them and to their parents. The aim of instituting the test, though, is not to identify failure but to inspire kids not to accept failure.

As to the summer school class, this represents something of an investment plan. Each year, Samurai High School tries to offer a summer school class. No students sign up. The Write Assessment would guarantee at least one class of students to take advantage of this opportunity and with a clear agenda in mind for that class.

I. Training

Currently DoDDs provides ample training for the Write but wastes it. Each year, DoDDs pays teachers to come to Washington and score the ASAPs (whose scores disappear). The exact same training in holistic scoring works for the Write test as well. Enough teachers have now scored the ASAP that this group could easily function as the "expert group" to train the few teachers needed to score the Write. In the long run, for reasons suggested in the next section, most teachers will learn to score Write essays.

J. The Parents

This group will present the greatest challenge, at first, to the proposal. Parents, particularly officers, will fear their students will fail. Quite likely, given the greater attention these families pay to education, a single year's iteration will show their fears somewhat exaggerated. Then these parents, typically advocates of "higher standards," will likely become the program's strongest supporters. The Write, after all, will provide them with a means of comparing their children to others.

A more difficult group to reach will be the parents of our lower-achieving students. Often their absence of concern translates into poor school performance. Here Samurai High School, with the support of a strong base commander, can make a firm stand: not registering any student who fails to either (a) pass the test or (b) finish the summer school intensive writing course. Given the choice between giving up a summer and homeschooling, parents will opt for the former. In any case, a strong relationship with the base commander is essential.

K. Conclusion

In conclusion, a considerable body of literature supports the idea of authentic assessment tests. The Write Assessment, particularly administered for "high stakes," fits the key characteristics of performance assessment: it provides feedback, encourages higher order thinking, makes students active participants, and promotes learning. By making passage of the Write a promotion requirement, Samurai Middle School can find a tight initial focus for its curriculum: writing. Students will quickly become interested in writing and in making their own writing better. Teachers, in all disciplines, will find ways to make writing more a part of the curriculum. Finally, parents and students will have a clear goal to guide the child's middle school years, a guide that focuses on learning.

BIBLIOGRAPHY

"Rubric for Accessing Student Writing." Arizona State Assessment Program, Arizona Department of Education, Phoenix, quoted in Rudner, Lawrence, Conoley, Jane Close, and Plake, Barbara. Understanding Achievement Tests: A Guide for School Administrators.

Baron, Mark A. and Boschee, Floyd. Authentic Assessment: The Key to Unlocking Student Success. Lancaster, Pennsylvania: 1995.

Clinton, Bill. March 13, speech to the North Carolina State Legislature as Quoted by Lilian Gringes, Volume I, Issue 4, 1997, p. 1.

Conoley, Jane Close, Barbara S. Plake, and Lawrence M. Rudner, ed. for ERIC Clearinghouse on Tests, Measurement, and Evaluation, American Institutes for Research. Understanding Achievement Tests: A Guide for School Administrators. The Catholic University of America: 1989.

Darling-Hammond, Linda. "The Implication of Testing Policy for Quality and Equality." Phi Delta Kappan 73, No 3. (Nov. 1991): pp. 220-225.

Earl, Lorna M. and Paul G. LeMahieu (1997). "Rethinking Assessment and Accountability." In 1997 ASCD Yearbook: Rethinking Educational Change With Heart and Mind. pp. 149-168.

Fruit, D. (1993) "The Effect of Social Environment on DoDDs Pacific Schools: Or the Little Company Town With the C-130s." Unpublished paper for Troy State University Class: Social Foundations of Higher Education.

Gardner, H. "Assessment in Context: The Alternative to Standardized Testing Practices: Options for Policy Makers." in Report of the Commission on Testing and Public Policy. B. Gifford ed. Boston: Kluwer Academic Press, 1989.

Gringes, Lilian. Volume I, Issue 4, May 1, 1997, p. 1.

Guskey, Thomas R. "Reporting on Student Learning: Lessons from the Past-Prescriptions for the Future," ASCD 1996 Yearbook, pp. 12-13.

Hirsch, E.D. Jr. The Schools We Need. Lanham Maryland: University Press, 1991, pp. 176-214.

Madaus, G. "The Effect of Important Tests on Students." Phi Delta Kappan 73, No. 3, November 1991, p. 230.

Mitchell, Ruth. Testing for Learning: How New Approaches to Evaluation Can Improve American Schools. New York: The Free Press, 1992.

O'Neill (1994) quoted in Schmoker, Mike. Results (1961): Introduction and Chapters 5-6.

Paratore, Jeanne, Ed D. Speech on "Creating Literacy Routines in Your Classroom," June 30, 1997.

Principals' Training Center. "Assessing Student Learning," 1977, p. 77.

Schmoker, Mike. "Setting Goals in Turbulent Times." In 1997 ASCD Yearbook: Rethinking Educational Change With Heart and Mind. Ed. Andy Hargreaves. Alexandria Virginia: Association for Supervision and Curriculum Development, 1997, pp. 128-149.

Wiggins, Grant P. Assessing Student Performance. San Francisco: Jossey-Bass, 1993.

C. Create a school improvement process specifically adapted to the current context of your international school and the aims you have selected. (65% of the grade).

A. Introduction: Samurai Middle School, a Unique Opportunity

The opening of Samurai Middle School offers DoDDs a unique opportunity to design a new kind of school, a school imbued with a culture of change. Such a school would incorporate successful innovations as well as providing inspiration for other schools within the system.

Further, as will be explored in a subsequent section, it would represent a radical departure for the Department of Defense Dependent Schools. A review of DoDDs history and an examination of its environment reveal a system mired in conservatism and burdened with a change mechanism that research predicts will fail, and does. If constructed correctly, YMS can give DoDDs a chance to try something new: a "continuous improvement school." Before returning to DoDDs, however, it's first necessary to examine the subject of change and reform in general, and effective school change in particular.

B. Change and Reform, Defining Terms

An aura of "political correctness" sometimes confuses the debate regarding school reform. Everyone from serious scholars (Fullan 1991) to President Clinton (1997) seems to advocate "reform," but there seems to be little agreement on what to do. Moreover, as Fullan's review shows, school reform suffers from a tortured history. Much-touted movements such as "open schools" and "learning modalities" come and go; others, such as "cooperative learning," merely return, repackaged, inviting teacher cynicism and reinforcing the innate conservatism of most teachers (Lortie 1975).

Part of the difficulty with school reform stems from the way advocates use their terminology. To illustrate this, recall a famous Harry Truman story. When asked to define the difference between a "depression" and a "recession," Harry Truman once said: "a 'recession' is when the other guy loses his job; a 'depression' is when you lose your job." Part of the problem, then, involves the term "reform" itself. The term comes loaded with positive connotations, the reason Fullan purposely avoids it in the title of his book on the subject. Americans have a proven cultural bias towards new things, typically termed "innovations." To term something a "reform" implies, automatically, some kind of positive change.

To cite a simple example, in his article on school reformers, Schlechty offers an interesting analogy of school reform as life in a frontier society with "trailblazers," "pioneers," "stay-at-homes," and "saboteurs." Though Schlechty points out that today's saboteurs might well have been yesterday's trailblazers, his language prejudices readers against these people and reinforces the typical tendency to see newer as better and change as reform. All too often, writers, teachers, and principals tend to view one another as sitting at one end or the other of the scale shown below.

Figure 1: A School Reform Scale

SCHOOL REFORM

Over-enthused Advocate                                                  Resistant Reactionary

{-----------------------------------------------------------------------------------------}

Of course, in reality, any person, or any school, can sit at any point along the line. For that reason, it's vital to distinguish "reform" from "change." This means taking the position that any given educational alteration might prove a genuine success or a genuine failure. It has become common for schools to compare their organizations to businesses. Half of all businesses fail, resulting in a loss of jobs and capital. For a school to fail means much more: it means harming the lives of children, not something to be taken lightly. For this reason, schools and teachers need to carefully distinguish a "reform" from just a "change."

C. When Is a Change a Reform and When Isn't a Change a Reform?

Logically, a person who really wants to change the schools in a positive manner need do nothing more than shop the marketplace of ideas and find the winners. As the examples from the business world show, however, that takes a lot of effort. Moreover, change advocates, the would-be "reformers," seldom exercise much caution. For that reason, a more rational person sits on the school reform scale somewhere between the two ends.

Figure 2: A Reasonable Position on School Reform

SCHOOL REFORM

Over-enthused Advocate               Informed Skeptic               Resistant Reactionary

{------------------------------------------X----------------------------------------------}

The informed skeptic's degree of support or resistance relies on one thing only: results. This leads to a working definition of school reform: a school reform is a significant, positive change in a school.

Several dimensions of this definition bear explanation. First, obviously, not all changes constitute reforms. To show this graphically:

Figure 3: Change Versus Reform

Second, the definition functions on the school level. One school's failed change might be another school's dramatic reform. This takes into account the fact that teachers directly and principals actually interact with the students, and ample literature supports the conclusion that they, particularly the teachers, carry out the change or fail to do so.

The third important aspect of this definition is that, obviously, some mechanism must distinguish the failed changes from the reforms. An adopted failed change will, by definition, have negative effects.

Finally, the change must have significance. Logically, two kinds of change can take place: those involving the school climate and those involving student achievement. Some writers consider a good climate a necessary, but not sufficient, precondition for reform. This would require considerable side discussion. Briefly, the literature on school climate, using the Kettering Climate Index, shows that a school may have a good climate and limited learning. For the purposes of this paper, "reforms," then, can refer to changes in school climate or student achievement and ideally both.

Having established that reform must take place in the context of an individual school, it's necessary then to look at any school within the local context. The same scales drawn above could rank schools as well as individuals. The Chelsea school system, for example, "taken over" by Boston University, probably includes most schools near the advocacy end of the scale. DoDDs schools, in contrast, tend towards the opposite extreme.

D. Why DoDDs Schools Are Innately Conservative

On the surface, DoDDs seems almost institutionalized for change. Fruit (1993) studied this issue in examining the social conditions of a DoDDs high school and found a curious contradiction: Though DoDDs possesses multiple means of forcing renewal of DoDDs schools, including a process of 5-year (DS2005.2, 1977) and 7-year reviews (DS2010.2, 1987), legislators and bureaucrats constantly worry about system stagnation (Oshinotu 1973 and Walling 1985). Moreover, several Congressional fact-finding missions in the 1970s and 1980s found those worries justified. Fruit (1993) found that several factors explain why DoDDs schools more often suffer from a lack of innovation than from over-experimentation:

(1) heavy bureaucratization and a well-developed set of rules

(2) an aging faculty group largely within 10 years of retirement

(3) isolation of individual schools from one another

(4) alienation of schools from host countries

(5) use of standardized textbooks, course offerings, and materials across the system

(6) increasing centralization via electronic control, i.e. "management by email"

(7) lack of movement by faculty within the system

As a result, quite unlike the international schools within an hour's drive of many DoDDs schools, the average DoDDs school suffers far more from a lack of experimentation than from "faddism."

DoDDs' attempts to "mandate change," moreover, have failed because they did not take into account local conditions. The last three major programs introduced into the schools, for example, the ASAP, the Terra Nova, etc., all came from above. Further, they all came as California imports. As time goes on, then, DoDDs schools increasingly become alike and change by decreasing amounts. This creates a cycle of "school improvement" that really leads to stagnation and conservatism. DoDDs-Washington imposes a new program. This excites only lukewarm teacher enthusiasm and consequently fails to become even a significant failure. Teachers become more conservative and change-resistant. This isn't entirely bad, of course. Students receive protection from some of the sillier changes (Fruit 1993). On the other hand, they seldom receive the benefits of trying programs that might help them learn.

E. Why Samurai Middle School Should Be Different

This author proposes, therefore, that, within the parameters of the body of regulations, Samurai Middle School act as an experiment to see how much improved learning can result from a school environment specifically designed to encourage and measure change.

Research by Odden (1995) found that successful schools rely heavily, not on externally imposed reforms, but on internal innovation. This reinforces the famous study by Lortie (1975) that found that school teachers, by and large, see things from a personal, self-enclosed perspective. Accordingly, they show little interest in externally imposed solutions. The design of YMS, therefore, must encourage bottom-up, teacher-initiated change.

The research, further, supports the view that collaboration often results in changes that improve learning. Rosenholtz (1989) found a positive correlation between the amount of teacher sharing time and the efficacy of the schools. In other words, more time spent together meant more student achievement and an attitude among teachers that their learning must continue throughout their careers. Rosenholtz found four factors positively correlated with "learning enriched schools" (p. 135):

(1) goal-setting activities that accentuate specific instructional objectives

(2) clear and frequent evaluations by principals, who identify specific improvement needs and monitor the progress teachers make in achieving them

(3) shared teaching goals that give legitimacy and support and create pressure to conform to norms of school renewal

(4) collaboration that at once enables and compels teachers to offer and request advice in helping each other improve instructionally.

The last point bears elaboration. Roland S. Barth, a recognized expert on school renewal, emphasizes that a school must become a "community of learners" in which students engage in meaningful activities. This doesn't mean, however, that YMS should simply experiment wildly. The biggest experiment should be an explicit model of experimentation (which itself could be changed). To prevent merely random experimentation, however, YMS needs a clear set of goals, as indicated above.

F. Broad Goals as a Framework and Defined Curricula as a Given

In general, goals should center around four areas identified by both Barth (1997) and Newman as essential. Note that the first three deal exclusively with measurable learning, the last with school climate, measurable as well. These goals include:

"help students to read and understand literary and historical writing

help students to gain proficiency in mathematics and science

teach people to write well

teach students to treat people fairly." (Newman, p. 5)

All of these can be measured in terms of "value-added" (Newman 1997), meaning the school can set up means of measuring progress both through criterion-referenced testing and authentic assessment. This also enables both a measuring of progress and a comparison to more traditionally defined DoDDs schools such as Kadena Middle School (KMS) and Lester Middle School.

Within this broadly defined framework, YMS would encourage teachers to try new things and to work with each other. With a largely DoDDs-mandated curriculum, teacher experimentation would center not on "what we want to do" but on "how can we do it and measure it."

Research shows that, for effective change to take place, teachers in a school must have a fairly unspecified set of goals, such as those outlined above, and the freedom to experiment. Starting YMS out with broad, though measurable, goals and the DoDDs-mandated curriculum provides a broad framework within which teachers can try to separate mere proposed changes from true reforms. In order to do this in the most productive way, however, the school needs a process, the subject of the next section.

G. A Model for Continuous Change

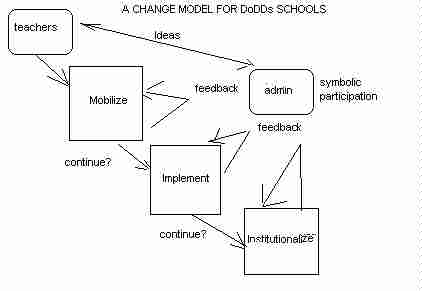

The following model should function as a means of coordinating change. The model proposes continuous improvement within three set stages: mobilization, implementation, and institutionalization. It borrows heavily from the steps presented by Bill Gerritz (1997). The real inspiration, however, stems from the work of W. Edwards Deming (Fellers 1992) on continuous improvement. Deming proposed that, given sufficient feedback and encouragement, workers and their managers, working as a team, could continuously improve their efforts over time.

Mobilization

1. Check to make sure this change doesn't violate existing regulations.

2. Publish information in the newsletter for parents.

3. Research other schools who have used this change.

4. Read studies positive and negative about this change.

5. Determine criteria for measuring success.

6. Have a pilot group test this idea.

7. Evaluate: Decide to adopt or not and how many teachers to involve.

Implementation

1. Introduce this topic at faculty meeting.

2. Enlist influential teachers, if possible, in this change.

3. Provide more detailed information to parents as to the decision's benefits and costs.

4. Have selected faculty implement this change.

5. Assess and publish report of gains/costs.

6. Evaluate: Decide to institutionalize or not.

Institutionalization

1. Incorporate this change in staff evaluations.

2. Establish a policy which explains in detail how this change will work and how much it must be used.

3. Continue to assess gains/costs on an on-going basis: Continue or not?

4. Review goals and revise policies (if necessary).

5. Consider similar ideas suggested by success/failure of this change.

6. Assess incoming faculty in the light of the institutionalized change.

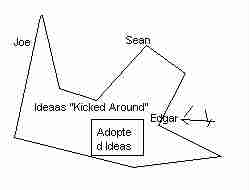

To put this into a more concrete form, consider the drawing below.

As the drawing indicates, changes go through an orderly process of review and adoption. At several points, moreover, the school can choose to discontinue, based on feedback. The method above purposely offers no timetable. This allows new ideas to take their own time to generate and gestate. The respective role of the administration and teachers bears further elaboration.

H. The Administrators and the Teachers

The terms for the administrators' role, "strategic" and "symbolic," suggest a purposely limited commitment in terms of time and attention. "Symbolic" means that the principal offers encouragement and rewards to teachers involved in finding positive change. "Strategic" implies that the administrator limits intervention and active participation to important moments instead of shepherding a pet project from start to finish. One key moment, as indicated above, involves evaluating personnel according to whether and how well they have adopted reforms the school has institutionalized.

Several reasons exist for giving administrators a more limited role in change than many past models for school change allocated to them. First, ownership by teachers plays the fundamental role in whether a school change becomes a part of the school culture. Further, principals do not have the time to be everywhere at once (PTC 1997). The principal must, then, not only delegate, but choose to show up at the appropriate times. While the principal may participate in initial planning, the teachers should have the major responsibility.

The role of the teachers, in this model, is fundamental. Again, research shows (Fullan among others) that teachers do not "buy into" innovations in which they have no ownership. For that reason, the model above assumes that innovation starts with teachers. The conception that this model embraces is one of an informal committee of teachers, specifically not a "school improvement committee," something more like a "teachers' lunch group," that meets together, say, once or twice a week.

This proposal comes for two important reasons. First, DoDDs already suffers from a plethora of similar "reform" committees, all of which, in the bureaucratic manner described above, become mere obligations linked to mandatory attendance and generated pieces of paper. They become fossilized.

A proper conception for this committee might be more like a "quality circle," à la Deming, or even a government "think tank." Members would meet informally, and ideally membership would vary with the idea. Nor would the committee issue any reports per se, especially in the planning stages. Members, by design, would come and go as their favored issues appeared or disappeared from the horizon. Ideally, administrative participation would occur occasionally, again, as ideas came to a particular administrator. If this sounds diametrically opposed to typical DoDDs culture, that is no accident. This model purposely proposes to somewhat overcompensate for the inherent stagnation outlined above.

When ideas neared the implementation stage, outputs and feedback would take on a more formal cast. Obviously, at the institutionalization stage, adoption would take on the familiar DoDDs look of memorandums, minutes, etc., but only the best ideas would reach this stage of development. Further, this process tunes institutionalization to the school, not the system, though naturally YMS would publish information about its "keepers." By the point of institutionalization, an adopted program would enjoy some teacher support and have a body of data showing success. Again, a graphic probably best illustrates a typical meeting of this committee.

Here the ideas largely float around in a relatively chaotic manner that a drawing of circles and lines can only suggest. A small number of adopted programs, the "keepers," become part of the school culture. The rest, the failed experiments, the ideas shot around the table a couple of times, die a natural death. If this model seems a bit chaotic, the model itself can be improved, updated, and personalized as time goes on and personnel change.

I. Conclusion

In conclusion, this model of change would help first YMS and, hopefully, later DoDDs. By deformalizing the change process, it hopes to rid the school of some of the inherent rigidity that the DoDDs system tends to instill. By its dynamism, it hopes to bring change to a school in imminent danger of becoming merely another lifeless clone of other DoDDs middle schools. Further, this model uses what we know from the existing literature not just to make one change in a school but to create an environment in which change is no longer feared but analyzed and, if proven, applied. This process will not only change YMS, but serve as a model for real reform within the entire DoDDs system.

Bibliography

(Note: DS and DSPAR refer to government documents).

Barth, Roland S. "Productive School Renewal." Internet abstract, 1997.

Clinton, Bill. March 13 speech to the North Carolina State Legislature, as quoted by Lilian Gringes, Volume I, Issue 4, 1997, p. 1.

DS 2000.5. "Department of Defense Dependent Schools Curriculum Development Program." June 14, 1978.

DS 2010.1. "Department of Defense Dependents Schools Accreditation Program." December 18, 1987.

Fellers, Gary. The Deming Vision: SPC/TQM for Administrators. Milwaukee: ASQC Quality Press, 1992.

Fruit, D. The Effect of Social Environment on DoDDs Pacific Schools: Or the Little Company Town With the C-130s. Unpublished paper for Troy State University Class: Social Foundations of Higher Education, 1993.

Fullan, Michael G., with Suzanne Stiegelbauer. The New Meaning of Educational Change. Jossey-Bass, 1991.

Gerritz, Bill. "Handout to ISTI Class Students." July 15, 1997.

Lockwood, Anne Turnbaugh. "Making Schools Productive." From Leaders for Tomorrow's Schools, North Central Regional Educational Laboratory, Fall 1996, Vol. 3.

Lortie, Dan. Schoolteacher: A Sociological Study. Chicago: University of Chicago Press, 1977.

Newmann, Fred M. "Success, Not Productivity." Internet retrieved document, 1997.

Odden, Allan and William Clune. "Improving Educational Productivity and School Finance." Educational Researcher, Vol. 24, No. 9, 1997, pp. 6-10, 22.

Oshirto, Yoshinobu. Historical Development of the Defense Schools With Emphasis on Japan, Far-East-Pacific Area, 1946-1973. Unpublished Dissertation in Curriculum Development and Supervision (Doctor of Education). Logan, Utah: Utah State University, 1973.

PTC. "Social Context and School Change: Presentation Narrative," PTC-Creating an Effective School, pp. 106-110.

Raringtogo, Dr. "Speech and Question and Answer Session" at DoDDs Japan Educators' Day, 1996.

Rosenholtz, Susan J. Teachers' Workplace: The Social Organization of Schools. New York: Longman, 1989.

Schlechty, Phillip. "On the Frontier of Reform with Trailblazers, Pioneers, and Settlers."

Walling, Donovan R. "America's Overseas School System." Phi Delta Kappan, February 1985, pp. 424-425.